Introduction

In a world saturated with screens, the digital image is omnipresent: televisions, smartphones, billboards and even monumental video walls. Each display technology (LCD, LED, OLED) has its own architecture, but all rely on the same fundamental unit: the pixel, the tiny point of light that, multiplied millions of times, makes up our visual world. Today, applications no longer merely display static images. They manipulate video streams captured by cameras in real time, modifying them on the fly to suit the needs of the user or the creator. This ability to intervene directly in the video stream, reshaping each image layer by layer, opens the door to new interactive experiences and wide creative possibilities. But what really happens beneath the surface when you modify a video stream coming from a camera? How does the pixel become a playground for developers?

Why Control Every Pixel?

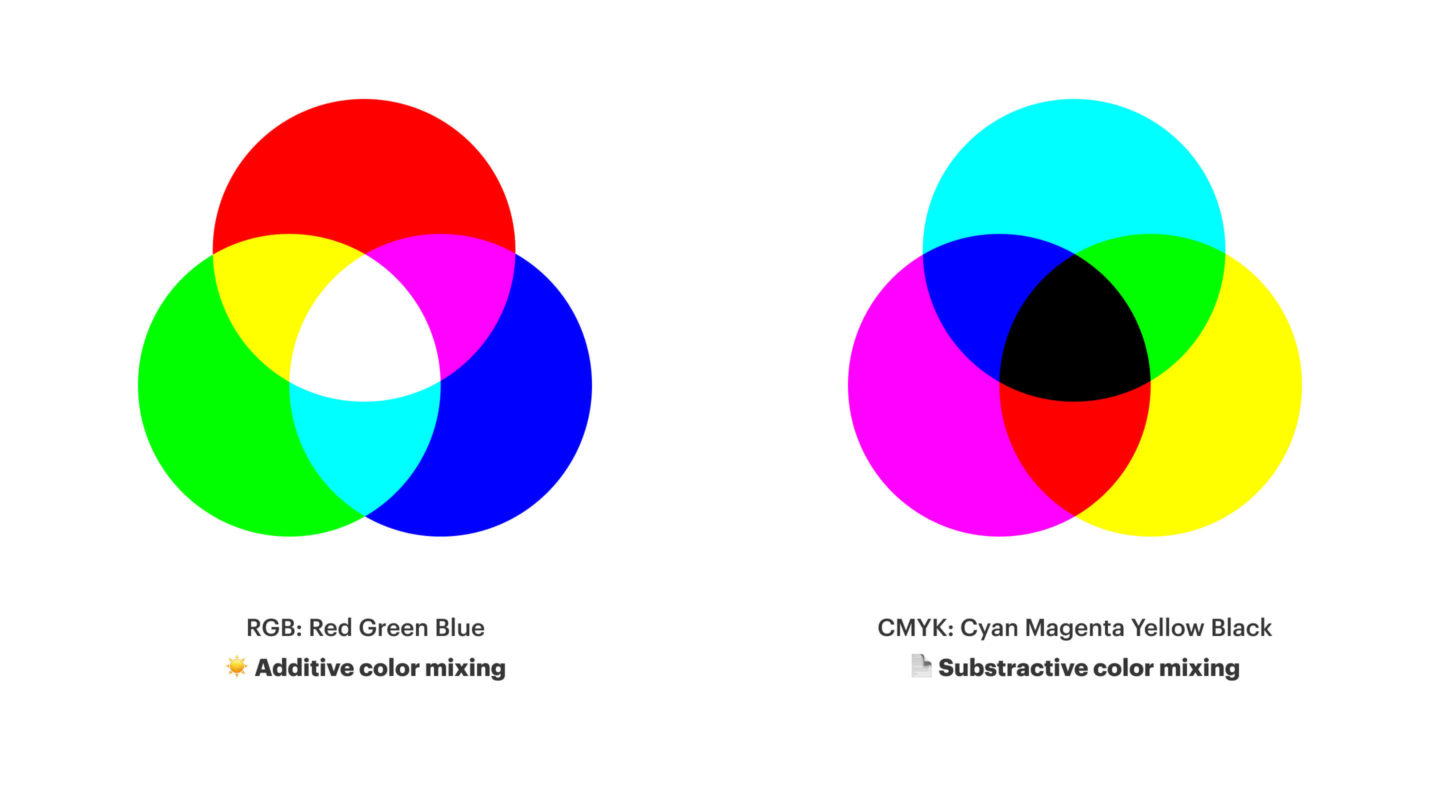

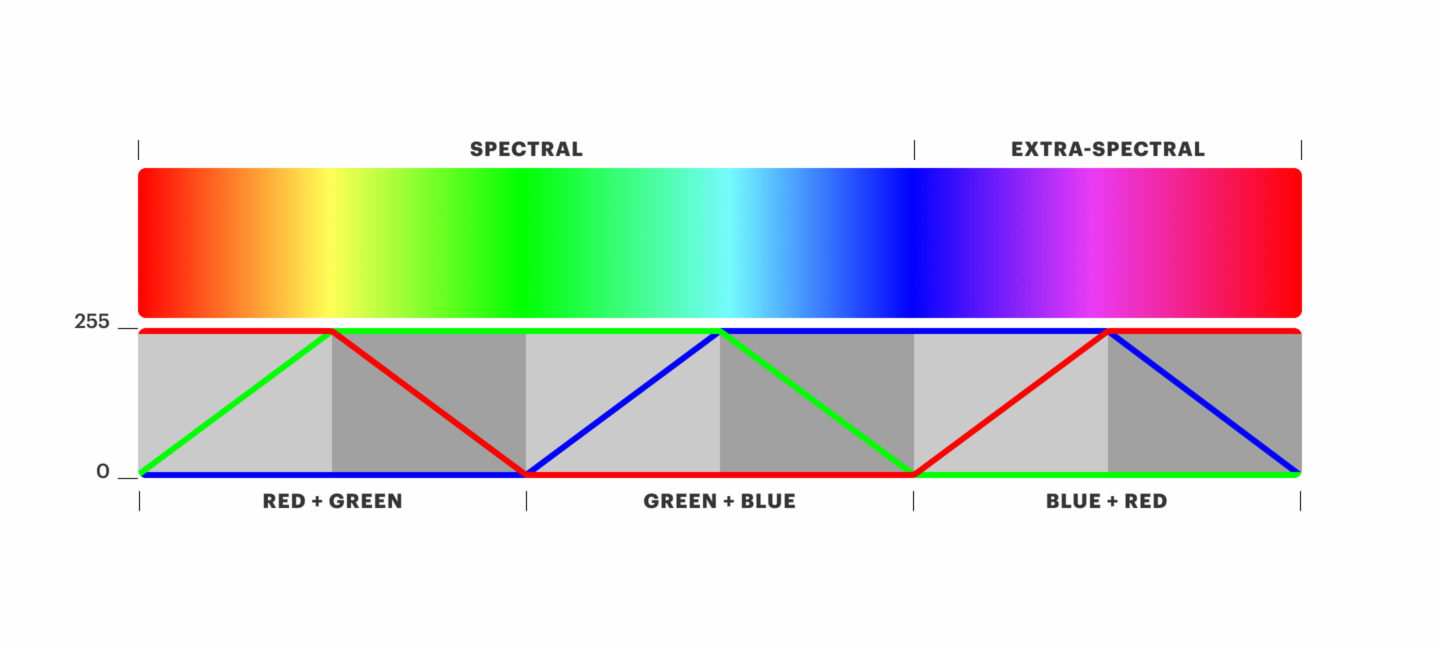

Every image displayed on a screen is made up of millions of pixels, each carrying colour and brightness information. Unlike printed images, which use the subtractive CMYK model (Cyan, Magenta, Yellow, Black), screens use the additive RGB model: Red, Green and Blue. The colour of a pixel is therefore defined by three values, typically each between 0 and 255 (8 bits per channel). Combining these three lights additively makes it possible to reproduce a wide range of colours: R=0, G=0, B=0 gives a black pixel, while R=255, G=255, B=255 gives a white one.
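To make the additive model concrete, here is a minimal Python sketch that represents a pixel as an (R, G, B) tuple of 8-bit values and mixes coloured lights by summing their channels. The helper name `add_light` and the clamp at 255 are illustrative assumptions: this is a simplified model, not a description of how a display physically combines light.

```python
# A pixel is modelled here as an (R, G, B) tuple of 8-bit values (0-255).
BLACK = (0, 0, 0)
WHITE = (255, 255, 255)
RED = (255, 0, 0)
GREEN = (0, 255, 0)
BLUE = (0, 0, 255)


def add_light(*pixels):
    """Additive mixing: sum each channel, clamped to the 8-bit maximum of 255."""
    return tuple(min(255, sum(channel)) for channel in zip(*pixels))


print(add_light(RED, GREEN))        # (255, 255, 0), i.e. yellow
print(add_light(RED, GREEN, BLUE))  # (255, 255, 255), i.e. white
```

Adding all three primaries at full intensity gives white, which is the opposite of mixing inks on paper, where combining colours darkens the result.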

This distinction is essential for understanding how filters and graphic effects work.

Most software manipulates pixels in bulk, whether it is PowerPoint drawing geometric shapes or a photo editor using digital brushes to change colours.

However, it is sometimes necessary to manipulate pixels one by one in order to create advanced visual effects or improve display quality. This technique is widely used in immersive video games, CAD and modelling, for instance.

But how can we act on every pixel of every image?

OpenGL: The Tool for Manipulating Every Pixel

OpenGL (short for Open Graphics Library) is a key technology that gives programs direct access to the graphics card (GPU) and control over visual rendering. It is available on smartphones (as OpenGL ES), on computers and even in web browsers (through WebGL). Its purpose is to render images efficiently by handing the work to the GPU.

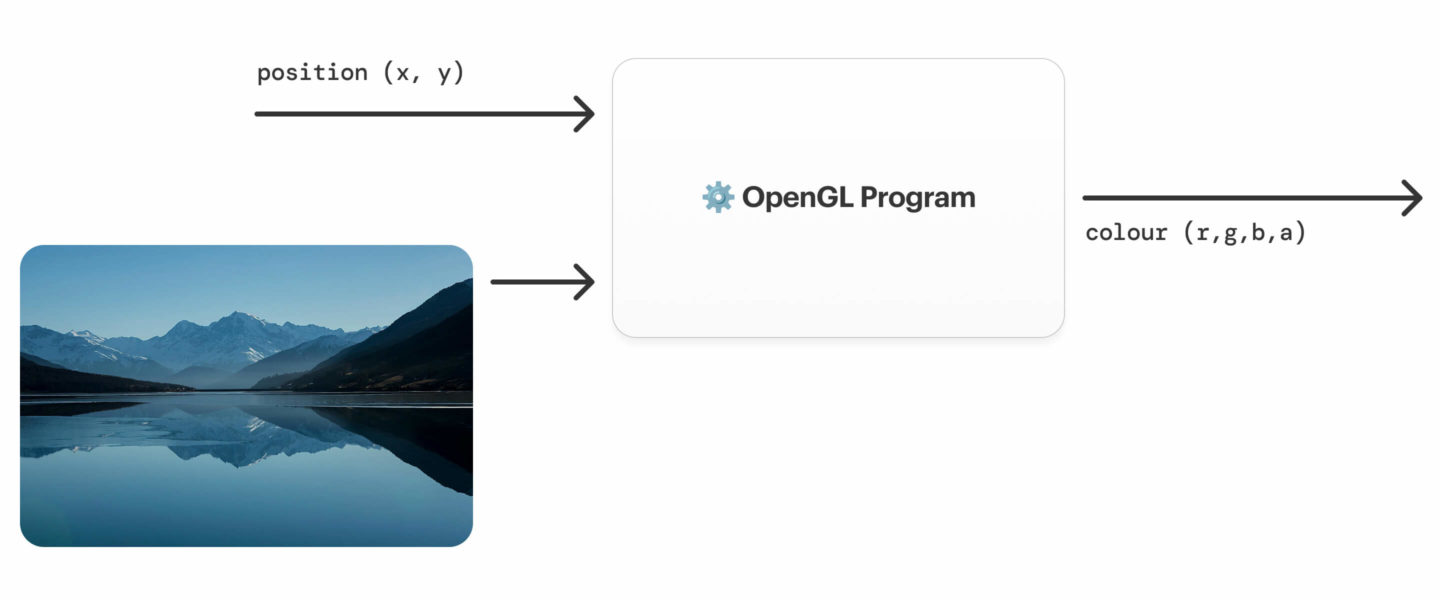

For every frame and every pixel, OpenGL runs a small program (a fragment shader) and gives it two pieces of information: the position of the pixel and the current image. The shader contains the algorithm that calculates the colour of that pixel, and this calculation can take into account both the pixel's position and the colours of other pixels in the image.
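This per-pixel model can be imitated on the CPU with a short Python sketch. Here a "shader" is simply a function that receives a pixel position and the whole current image and returns one colour. `run_shader`, `mirror_shader` and the list-of-rows image layout are hypothetical names chosen for illustration, not OpenGL's API: real shaders are written in GLSL and executed in parallel on the GPU.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # image[y][x] -> (R, G, B)

# Hypothetical signature: position of the pixel + whole current image -> new colour.
Shader = Callable[[int, int, Image], Pixel]


def run_shader(image: Image, shader: Shader) -> Image:
    """Call `shader` once per pixel, always reading from the unchanged input image.

    A GPU runs these calls in parallel; this CPU loop only mimics the model.
    """
    height, width = len(image), len(image[0])
    return [[shader(x, y, image) for x in range(width)] for y in range(height)]


def mirror_shader(x: int, y: int, image: Image) -> Pixel:
    """Example shader: read the horizontally mirrored pixel, i.e. another pixel of the image."""
    width = len(image[0])
    return image[y][width - 1 - x]


frame = [[(255, 0, 0), (0, 255, 0), (0, 0, 255)]]
print(run_shader(frame, mirror_shader))
# [[(0, 0, 255), (0, 255, 0), (255, 0, 0)]]
```

Writing the result to a new image, rather than modifying the input in place, matches the shader model: every pixel sees the same, untouched source frame.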

In the “Vision[s]” project (an augmented reality app that simulates visual impairments), OpenGL is used to modify the camera’s video stream in real time. By embedding OpenGL programs in an application written in Flutter, specific filters are applied to each image before it is displayed on screen.

Users can thus see in real time how a given condition affects a person's vision.

Using OpenGL: Some Filter Examples

Black and White Filter

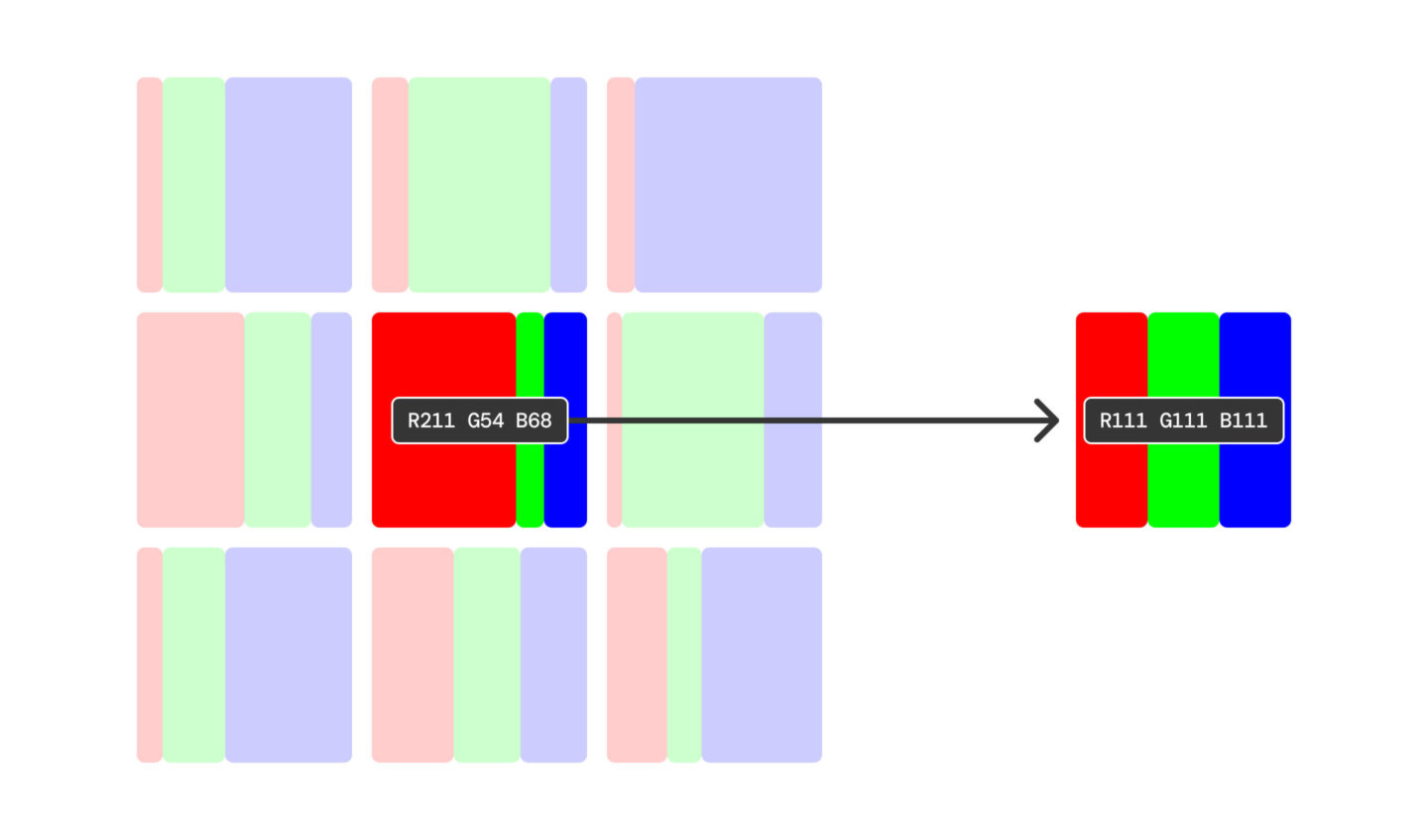

To obtain a black and white (greyscale) image, simply calculate the average of the three RGB values and apply the result to all three components:

Example:

Suppose you want to turn a red pixel with the values R=211, G=54, B=68 into a grey. It is enough to calculate the average value: (211 + 54 + 68) / 3 = 111. Its new code is therefore R=111, G=111, B=111.
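The averaging rule translates directly into code. The sketch below (function names are hypothetical) reproduces the worked example. The second function shows a common alternative that is not taken from the article: it weights the channels with the ITU-R BT.601 luma coefficients, because the eye is more sensitive to green than to blue.

```python
def grayscale_average(pixel):
    """Average of R, G and B (integer division), copied into all three channels."""
    r, g, b = pixel
    grey = (r + g + b) // 3
    return (grey, grey, grey)


def grayscale_luma(pixel):
    """Alternative (not from the article): BT.601 weighted luma."""
    r, g, b = pixel
    grey = round(0.299 * r + 0.587 * g + 0.114 * b)
    return (grey, grey, grey)


print(grayscale_average((211, 54, 68)))  # (111, 111, 111)
print(grayscale_luma((211, 54, 68)))     # (103, 103, 103)
```

Applying either function to every pixel of a frame, for example `[[grayscale_average(p) for p in row] for row in image]`, turns the whole image grey.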

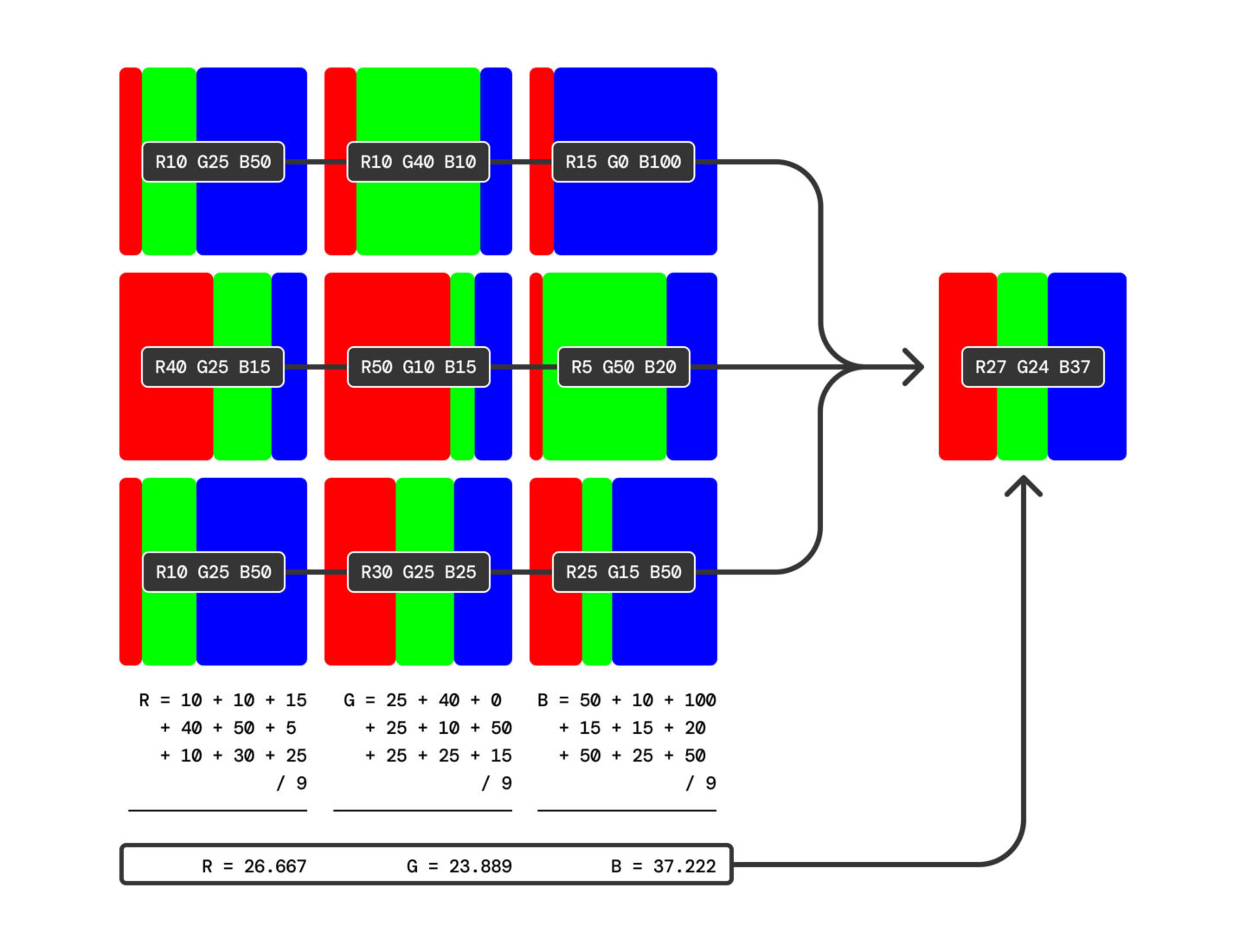

Blur Effect

A blur effect is achieved by replacing each pixel with the average of its neighbouring pixels, computed separately for each colour channel. The more neighbouring pixels included in the calculation, the more pronounced the blur.
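A minimal box blur, assuming images stored as rows of (R, G, B) tuples, could look like this. The article does not specify how edges are handled; this sketch simply skips neighbours that fall outside the image. `box_blur` and its `radius` parameter are hypothetical names: `radius` controls how many neighbours are averaged.

```python
def box_blur(image, radius=1):
    """Replace each pixel by the per-channel average of its (2r+1) x (2r+1) neighbourhood.

    Neighbours outside the image are skipped, so edge pixels average fewer values.
    A larger `radius` includes more neighbours and produces a stronger blur.
    """
    height, width = len(image), len(image[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            totals = [0, 0, 0]
            count = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width:
                        for c in range(3):
                            totals[c] += image[ny][nx][c]
                        count += 1
            # Integer average per channel.
            row.append(tuple(t // count for t in totals))
        out.append(row)
    return out
```

With a single white pixel between two black ones, the white spreads into its neighbours: `box_blur([[(0, 0, 0), (255, 255, 255), (0, 0, 0)]])` returns `[[(127, 127, 127), (85, 85, 85), (127, 127, 127)]]`.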

However, this technique requires many calculations: even a simple blur can demand several dozen multiplications and additions per pixel, repeated for millions of pixels in every frame, which makes it costly in terms of performance. That is why optimising these calculations remains a major challenge in computer graphics.
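A back-of-the-envelope calculation shows why the cost matters. The sketch assumes a 1920×1080 frame, three colour channels, 30 frames per second and a 5×5 kernel, all illustrative figures rather than measurements from Vision[s]. It also compares the naive approach with a separable blur, one well-known optimisation for box and Gaussian kernels (not necessarily the one used in the project) that replaces a single 2D pass with a horizontal pass followed by a vertical pass.

```python
def blur_cost(width, height, kernel_size, channels=3, fps=30):
    """Multiply-add operations per second: naive 2D kernel vs separable kernel."""
    pixels = width * height
    # Naive: every pixel reads k x k neighbours for each channel.
    naive = pixels * kernel_size**2 * channels * fps
    # Separable: one horizontal pass (k taps) plus one vertical pass (k taps).
    separable = pixels * 2 * kernel_size * channels * fps
    return naive, separable


naive, separable = blur_cost(1920, 1080, kernel_size=5)
print(f"naive 5x5: {naive:,} multiply-adds/s")   # naive 5x5: 4,665,600,000 multiply-adds/s
print(f"separable: {separable:,} multiply-adds/s")  # separable: 1,866,240,000 multiply-adds/s
```

Under these assumptions the naive blur needs about 4.7 billion multiply-adds per second, against roughly 1.9 billion for the separable version, and the gap widens as the kernel grows.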

A simplified example of the calculation is shown below. In reality, more neighbouring pixels must be considered, each weighted according to its position relative to the central pixel.

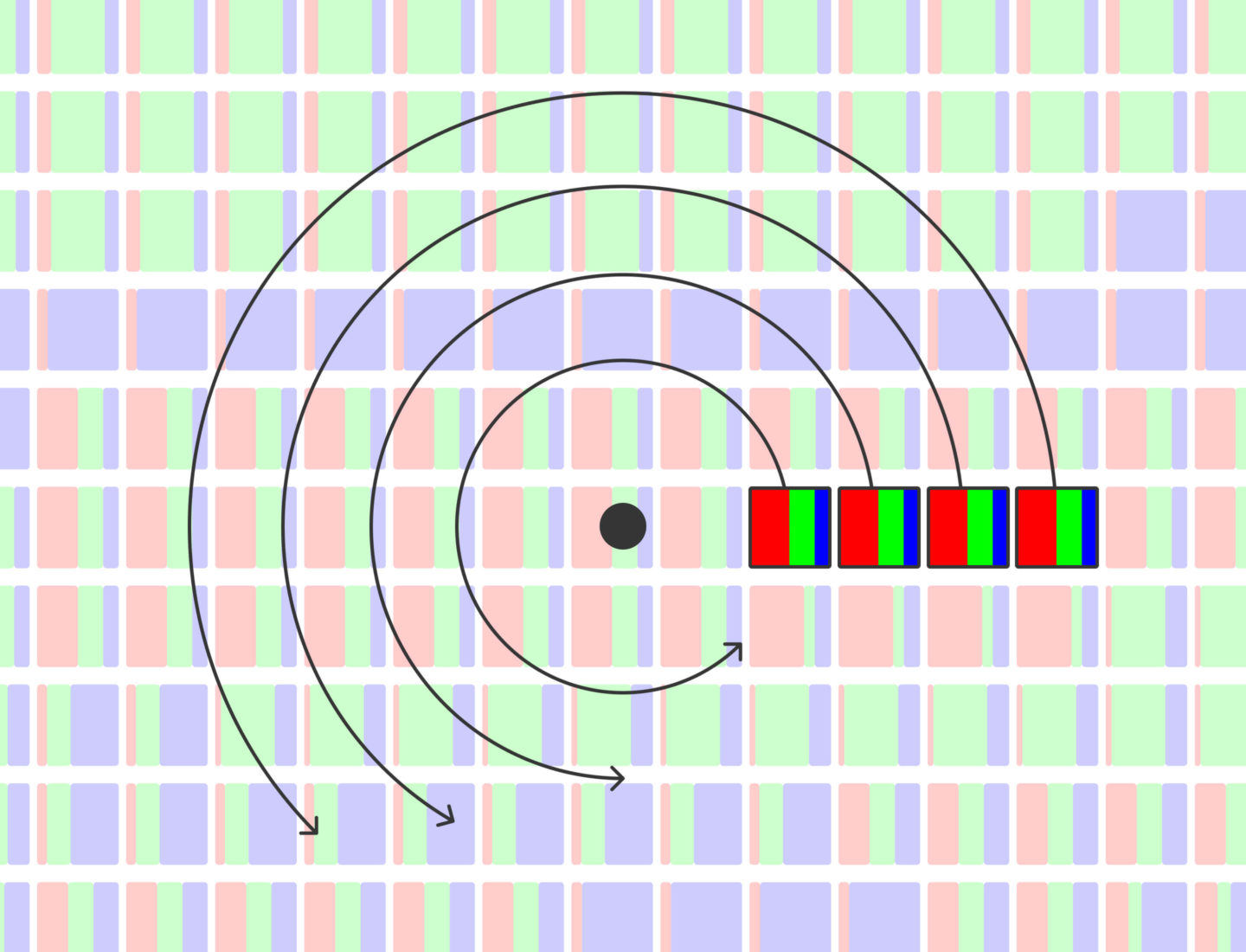
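Weighting neighbours by position can be sketched with a Gaussian kernel, a common choice (assumed here, not confirmed as the method used in Vision[s]) in which a neighbour's weight decreases with its distance from the central pixel. `gaussian_kernel`, `weighted_pixel`, `radius` and `sigma` are hypothetical names. Borders are clamped, similar in spirit to OpenGL's `GL_CLAMP_TO_EDGE` texture wrap mode.

```python
import math


def gaussian_kernel(radius, sigma):
    """Return a normalised (2*radius+1) x (2*radius+1) grid of weights."""
    weights = [
        [math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))
         for dx in range(-radius, radius + 1)]
        for dy in range(-radius, radius + 1)
    ]
    total = sum(sum(row) for row in weights)
    # Dividing by the total makes the weights sum to 1, so brightness is preserved.
    return [[w / total for w in row] for row in weights]


def weighted_pixel(image, x, y, kernel):
    """Weighted average of the neighbourhood of (x, y), per colour channel."""
    radius = len(kernel) // 2
    height, width = len(image), len(image[0])
    acc = [0.0, 0.0, 0.0]
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Clamp neighbour coordinates so border pixels reuse the nearest edge pixel.
            ny = min(max(y + dy, 0), height - 1)
            nx = min(max(x + dx, 0), width - 1)
            weight = kernel[dy + radius][dx + radius]
            for c in range(3):
                acc[c] += weight * image[ny][nx][c]
    return tuple(round(v) for v in acc)


kernel = gaussian_kernel(radius=1, sigma=1.0)
print([round(w, 3) for w in kernel[1]])  # [0.124, 0.204, 0.124]
```

The middle row shows the idea: the central pixel contributes most, and its direct neighbours contribute less.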

Central Spiral

To simulate a visual impairment such as AMD (age-related macular degeneration), the Vision[s] application uses a spiral effect. The idea is to rotate each pixel around the centre of the image by an angle that depends on its distance from the centre: the closer a pixel is to the centre, the greater the rotation, which creates an impression of visual distortion. The final result can be seen on the Jules-Gonin Eye Hospital website.
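A possible implementation of such a spiral is shown below as a Python sketch, not the actual Vision[s] shader. Like a fragment shader, it works backwards: for each output pixel it rotates the position around the centre and reads the source pixel found there (nearest-neighbour sampling). The linear falloff and the `strength` and `radius` parameters are assumptions made for illustration.

```python
import math


def swirl(image, strength=2.0, radius=None):
    """Spiral distortion: rotate sample positions more strongly near the centre.

    `strength` (rotation in radians at the centre) and `radius` (extent of the
    effect in pixels) are hypothetical parameters chosen for illustration.
    """
    height, width = len(image), len(image[0])
    cx, cy = (width - 1) / 2, (height - 1) / 2
    if radius is None:
        radius = min(width, height) / 2
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            dx, dy = x - cx, y - cy
            distance = math.hypot(dx, dy)
            # Angle shrinks linearly from `strength` at the centre to 0 at `radius`.
            angle = strength * max(0.0, 1.0 - distance / radius)
            cos_a, sin_a = math.cos(angle), math.sin(angle)
            # Inverse mapping: rotate the position to find which source pixel to read.
            sx = cx + dx * cos_a - dy * sin_a
            sy = cy + dx * sin_a + dy * cos_a
            # Nearest-neighbour sampling, clamped to the image borders.
            ix = min(max(round(sx), 0), width - 1)
            iy = min(max(round(sy), 0), height - 1)
            row.append(image[iy][ix])
        out.append(row)
    return out
```

Setting `strength=0` returns the image unchanged, and pixels farther than `radius` from the centre are never moved, so the distortion stays concentrated around the centre of the image.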

Conclusion: Towards Complete Mastery of Graphic Rendering

Behind every displayed pixel lies code that determines its appearance. OpenGL, through its programs (“shaders”), makes it possible to define precisely, in real time, how each pixel is displayed. Whether the goal is to create impressive visual effects in video games, optimise image processing or simulate visual impairments, pixel manipulation is a fascinating field where performance and creativity meet.

If you want to get started with pixel manipulation, note that libraries and graphics engines rely on these same principles to generate advanced effects such as water, fire or dynamic lighting. By delving deeper into OpenGL and its shaders, we can completely rethink how images are displayed and pave the way for ever more immersive visual innovations.
